As a developer, you may or may not need to go into the database to write queries, design tables and indexes, or help configure your SQL Server systems. If you do, these tips should help make the process less painful.

T-SQL Tips

While much of your code may be generated, at least some of it will have to be written by hand. If you write any of your T-SQL code manually, these tips will help you avoid problems.

SELECT * is not necessarily a bad thing, but it's a good idea to only move the data you really need to move and only when you really need it, in order to avoid network, disk, and memory contention on your server.

For small sets of data that are infrequently updated, such as lookup values, build a method of caching them in memory on your application server rather than constantly querying the database for them.
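As a rough illustration of the caching tip, the sketch below keeps a small lookup table in application memory and reloads it only after a time-to-live expires. Everything in it is hypothetical: the `LookupCache` class, the `load_order_statuses` function (a stand-in for a real query against SQL Server), the status values, and the five-minute expiry are assumptions made for the example, not part of any library.

```python
import time
from typing import Callable, Dict, Optional


class LookupCache:
    """Holds a small, rarely changing lookup table in application memory."""

    def __init__(
        self,
        loader: Callable[[], Dict[int, str]],
        ttl_seconds: float = 300.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._loader = loader          # function that actually queries the database
        self._ttl = ttl_seconds        # how long cached values are considered fresh
        self._clock = clock            # injectable so expiry can be tested
        self._values: Optional[Dict[int, str]] = None
        self._loaded_at = 0.0

    def get_all(self) -> Dict[int, str]:
        """Return the cached table, reloading it if it is missing or stale."""
        now = self._clock()
        if self._values is None or now - self._loaded_at >= self._ttl:
            self._values = self._loader()
            self._loaded_at = now
        return self._values

    def get(self, key: int) -> Optional[str]:
        """Look up one value; returns None if the key is unknown."""
        return self.get_all().get(key)


# Hypothetical stand-in for a real database query; it counts its calls
# so we can see how often the "database" is hit.
calls = {"count": 0}


def load_order_statuses() -> Dict[int, str]:
    calls["count"] += 1
    return {1: "Pending", 2: "Shipped", 3: "Cancelled"}


cache = LookupCache(load_order_statuses, ttl_seconds=300.0)
first = cache.get(2)   # triggers the one and only load
second = cache.get(3)  # served from memory
```

This version is not thread-safe, and the right expiry depends on how stale a lookup value is allowed to be in your application. A shorter time-to-live trades more queries for fresher data.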

Ensure your variables and parameters are the same data types as the columns they are compared against. An implicit or explicit conversion applied to a column can prevent index seeks, leading to table scans and slow performance.

Index Tips

Indexing tables is not an exact science. It requires some trial and error combined with lots of testing to get things just right. Even then, the performance metrics will change over time as you add more and more data.

You get exactly one clustered index on a table. Ensure you have it in the right place. First choice is the most frequently accessed column, which may or may not be the primary key. Second choice is a column that structures the storage in a way that helps performance. This is a must for partitioning data.

Performance is enhanced when indexes are placed on columns used in WHERE, JOIN, ORDER BY, GROUP BY, and TOP. Always test to ensure that the index does help performance.

Database Design Tips

Again, you may not be delving much into this, but there are a few tips to keep in mind.

While it is possible to over-normalize a database, under-normalization is much more prevalent. This leads to repetition of data, inefficient storage, and poor performance. Data normalization is a performance tuning technique as well as a storage mechanism.

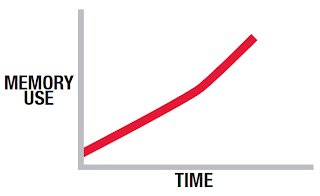

Make sure your database doesn't hold 'historic' data that is no longer used. Archive it, either into a special 'archive' database, a reporting OLAP database, or to file. Large tables mean longer table scans and deeper indexes. This in turn can mean that locks are held for longer. Admin tasks such as statistics updates, DBCC checks, and index builds take longer, as do backups.